Single-Stage Visual Query Localization in Egocentric Videos

(NeurIPS 2023)

Hanwen Jiang¹, Santhosh Ramakrishnan¹, Kristen Grauman¹,²

¹UT Austin, ²FAIR, Meta

Paper | Code

Visual Query Localization (VQL) on long-form egocentric videos requires spatio-temporal search and localization of visually specified objects and is vital for building episodic memory systems. Prior work develops complex multi-stage pipelines that leverage well-established object detection and tracking methods to perform VQL. However, each stage is trained independently, and the complexity of the pipeline results in slow inference. We propose VQLoC, a novel single-stage VQL framework that is end-to-end trainable. Our key idea is to first build a holistic understanding of the query-video relationship and then perform spatio-temporal localization in a single-shot manner. Specifically, we establish the query-video relationship by jointly considering query-to-frame correspondences between the query and each video frame and frame-to-frame correspondences between nearby video frames. Our experiments demonstrate that our approach outperforms prior VQL methods by 20% in accuracy while achieving a 10x improvement in inference speed. VQLoC is also the top entry on the Ego4D VQ2D challenge leaderboard.

Problem Definition

The goal is to search and localize open-set visual queries in long-form videos, jointly predicting the appearance time window and the corresponding spatial bounding boxes.
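To make the expected output concrete, here is a minimal sketch of a container for one prediction: a time window plus one box per frame inside it. The class name `ResponseTrack`, its fields, and the `(x1, y1, x2, y2)` pixel box format are illustrative assumptions, not the data format used by the released code.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Box layout is an assumption: (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class ResponseTrack:
    """Hypothetical container for one VQL prediction."""

    start_frame: int       # first frame in which the object appears
    end_frame: int         # last frame in which the object appears (inclusive)
    boxes: Dict[int, Box]  # frame index -> bounding box

    def is_consistent(self) -> bool:
        """Check that every frame in the window has exactly one well-formed box."""
        if self.start_frame > self.end_frame:
            return False
        expected = set(range(self.start_frame, self.end_frame + 1))
        if set(self.boxes) != expected:
            return False
        return all(x1 < x2 and y1 < y2 for x1, y1, x2, y2 in self.boxes.values())
```

A prediction is then just a window and its boxes, for example `ResponseTrack(10, 12, {10: (0, 0, 5, 5), 11: (1, 1, 6, 6), 12: (2, 2, 7, 7)})`. A track that is missing boxes for some frames in the window fails `is_consistent()`.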

Challenges

The challenges of the task arise from: i) the "needle-in-the-haystack" problem; ii) the diversity of open-set queries; and iii) the lack of an "exact" match in the target videos.

Method

|

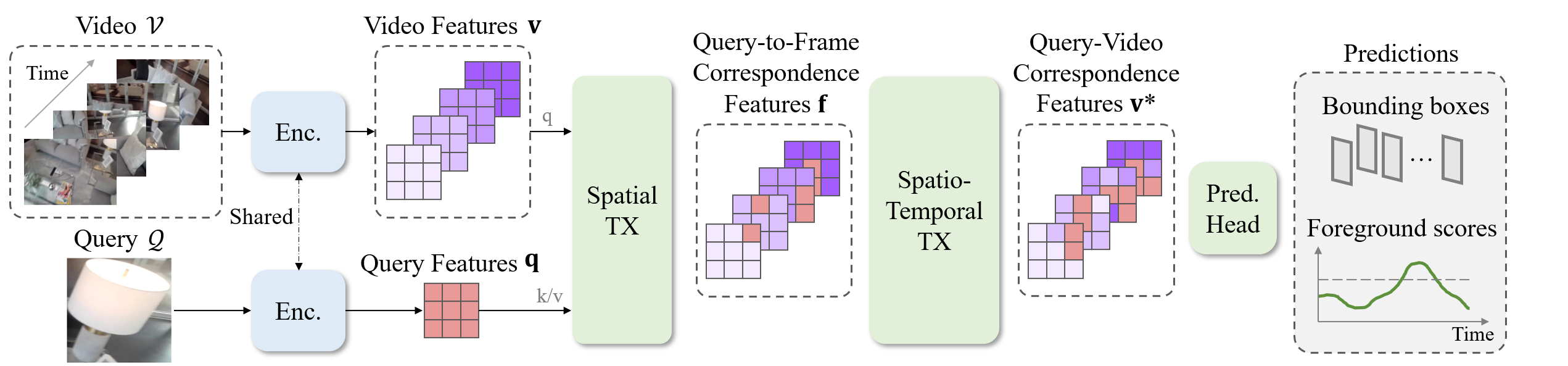

| Our model VQLoC estabishing a holistic undertsanding of query-video relationship, and predicts the results based on the understanding in a single shot. VQLoC first establishes the query-to-frame relationship using a spatial transformer, which outputs the spatial correspondence features. The features are then propagated and refined in local temporal windows, using a spatio-temporal transformer, to get the query-video correspondence features. Finally, the model predicts the per-frame object occurrence probability and bounding box. |
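Two steps of this pipeline can be illustrated without a deep learning framework: restricting frame-to-frame attention to a local temporal window, and turning per-frame occurrence probabilities into a time window. The sketch below is an assumption-laden illustration, not the authors' implementation. The function names, the window size, the 0.5 threshold, and the rule of keeping the latest contiguous run of confident frames are all hypothetical choices.

```python
from typing import List, Optional, Tuple


def local_window_mask(num_frames: int, window: int) -> List[List[bool]]:
    """Build a mask where mask[i][j] is True if frame i may attend to frame j.

    Attention is limited to frames at most `window` steps apart (assumed scheme).
    """
    return [
        [abs(i - j) <= window for j in range(num_frames)]
        for i in range(num_frames)
    ]


def decode_track(probs: List[float], threshold: float = 0.5) -> Optional[Tuple[int, int]]:
    """Return (start, end) of the latest contiguous run with prob >= threshold.

    Returns None when no frame reaches the threshold. Both the threshold and
    the "latest run" rule are assumptions made for this sketch.
    """
    end: Optional[int] = None
    # Scan backwards so the first run we complete is the latest one.
    for t in range(len(probs) - 1, -1, -1):
        if probs[t] >= threshold:
            if end is None:
                end = t
        elif end is not None:
            return (t + 1, end)
    return (0, end) if end is not None else None
```

With `window=1`, frame 0 may attend to frames 0 and 1 only. The per-frame boxes predicted for the frames inside the decoded window would then form the response track.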

Results

|

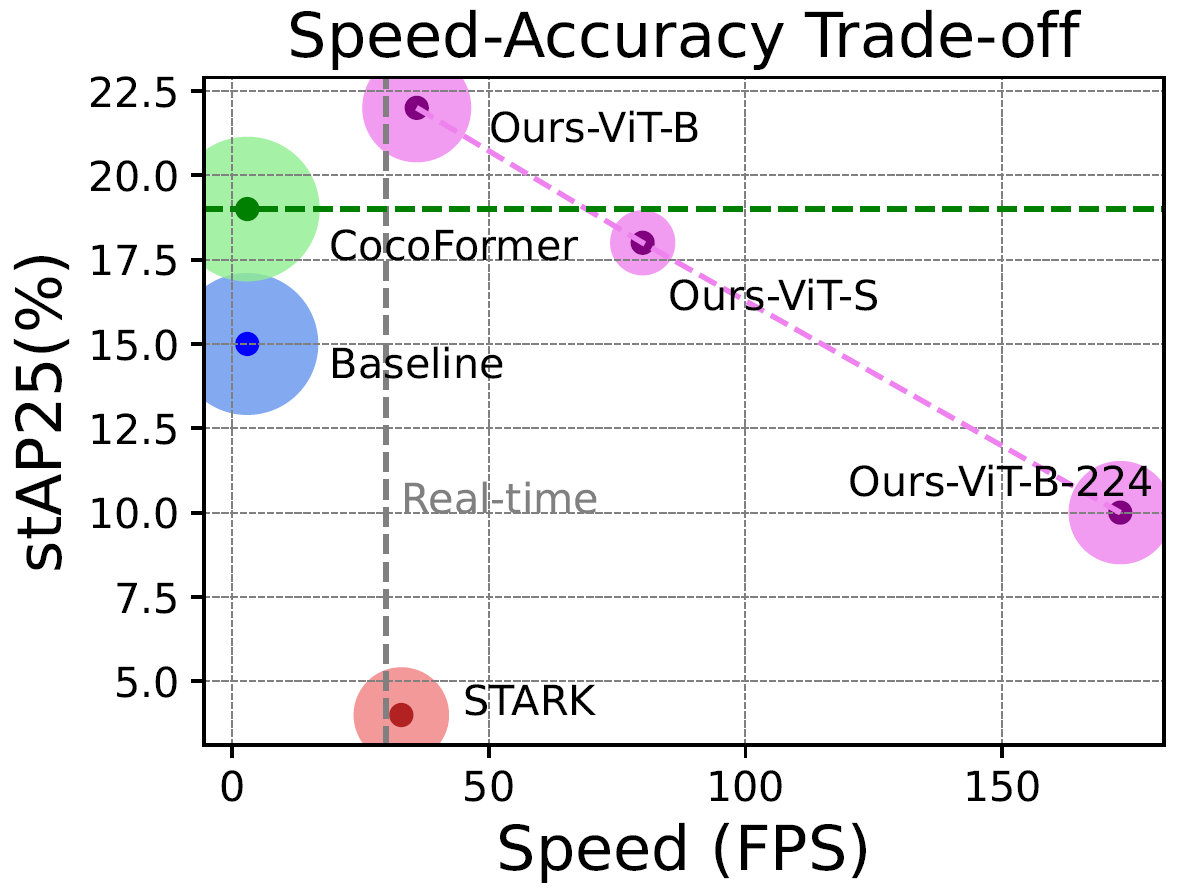

| Our model VQLoC achieve 20% performance gain compared with prior works and improves the inference speed 10x. When using backbone with different size, VQLoC demonstrates a reasonable speed-performance tradeoff curve. |

Visualization

We show the identified response tracks of the query objects, with bounding boxes.

Video

Citation
